Paleoclimate transfer functions

Back in the day I was given the task of converting a set of programs from FORTRAN 66 to something that could be run on a PC. These programs were designed to take any of a wide variety of paleoclimatic indicator sets (in this case the relative abundances of 30 species of foraminifera, together with known distributions of summer and winter temperature and salinity at the ocean surface over thousands of surface samples) and convert them into a transfer function relating the desired environmental parameters to the foraminiferal abundance data.

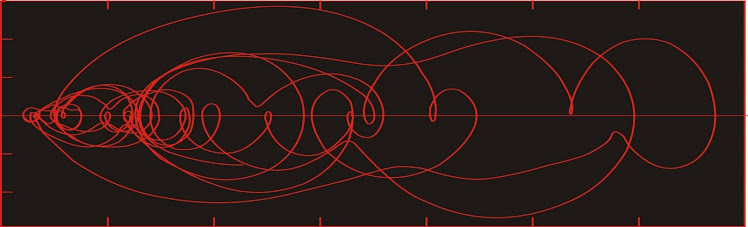

The basic idea is this: Summertime surface temperature (Ts) will be a function of the foraminiferal species abundance. If the abundances measured at a particular point were represented by the probability series p1, p2, p3, . . . , p30 (where Σp = 1), then an expression might be found as follows:

Ts = a1p1 + a2p2 + a3p3 + . . . + a30p30 + a31p1p1 + a32p1p2 + . . .

and so on for a whole lot of terms. Assuming we used all first- and second-order terms, we would have to determine 496 parameters in the above equation (30 first-order terms, 465 squares and cross-products, and a constant). That is rather a lot, particularly for the computers we were using when FORTRAN 66 was in vogue (well, okay, it was obsolete then--we were really using FORTRAN 77).
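The parameter count is easy to check. Here is a minimal sketch, assuming the 496 includes a constant term (as the six-factor version of the equation does); the function name `count_terms` is mine and is not taken from the original programs.

```python
from itertools import combinations_with_replacement


def count_terms(n_vars: int, include_constant: bool = True) -> int:
    """Count the coefficients in a full second-order polynomial in n_vars variables."""
    linear = n_vars
    # Squares and cross-products: unordered index pairs (i, j) with i <= j.
    second_order = sum(1 for _ in combinations_with_replacement(range(n_vars), 2))
    return linear + second_order + (1 if include_constant else 0)


print(count_terms(30))  # 496 parameters for 30 species
print(count_terms(6))   # 28 parameters for 6 factors
```

The second-order count grows roughly as the square of the number of variables, which is why cutting thirty variables down to six makes such a difference.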

So instead of using all of the foraminiferal species abundance data, we would use factor analysis to simplify the data. Factor analysis is a bit of statistical wizardry which groups together species that behave in a similar fashion into a single factor. We would use the minimum number of factors needed to represent an acceptable amount of variance--in the case of transfer functions for the North Atlantic we reduced our thirty species to six factors. Our expression for temperature then becomes:

Ts = a1f1 + a2f2 + a3f3 + . . . + a6f6 + a7f1f1 + a8f1f2 + . . . + a27f6f6 + a28

Values for a1 to a28 were found by multiple regression. The PCs of the late 1980s were capable of running such programs in a reasonable length of time.
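To make the regression step concrete, here is a rough sketch in plain Python. It is not the original FORTRAN: the helper names (`design_row`, `solve`, `fit`) are mine, the data are synthetic, and solving the normal equations by Gaussian elimination is just one simple way to do multiple regression (numerical libraries usually prefer QR or SVD). The check uses two factors rather than six to keep the output small.

```python
import random
from itertools import combinations_with_replacement


def design_row(factors):
    """Linear terms, then all squares and cross-products, then a constant 1."""
    n = len(factors)
    row = list(factors)
    row += [factors[i] * factors[j]
            for i, j in combinations_with_replacement(range(n), 2)]
    row.append(1.0)
    return row


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(a[i]) + [b[i]] for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            ratio = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= ratio * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit(samples, temps):
    """Least-squares coefficients from the normal equations (X^T X) a = X^T y."""
    rows = [design_row(s) for s in samples]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, temps)) for i in range(k)]
    return solve(xtx, xty)


# Synthetic, noise-free check: invent coefficients, generate "temperatures",
# and confirm the fit recovers them. All numbers here are made up.
random.seed(0)
true_coeffs = [2.0, -1.0, 0.5, 0.3, -0.7, 15.0]
samples = [[random.uniform(-1, 1), random.uniform(-1, 1)] for _ in range(200)]
temps = [sum(a * x for a, x in zip(true_coeffs, design_row(s))) for s in samples]
fitted = fit(samples, temps)
print([round(c, 6) for c in fitted])
```

With six factors, `design_row` returns 28 entries in the same order as a1 to a28 in the equation above, so the same `fit` would apply unchanged.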

Once all of the surface samples were run for the present day, you could look at the foraminifera found at different levels of a dated core. Let's say you have a sample from a level in a core known to be 100,000 years old. You count the numbers of the different species of foraminifera, convert your observations into the factors determined above, apply the factor loading scores to the transfer function, and calculate the sea surface temperature at the site of your core 100,000 years ago.
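The downcore calculation can be sketched in a few lines. Everything numeric below is hypothetical (three species and two factors instead of thirty and six, with made-up counts, loadings, and coefficients), and the plain linear projection onto the factors is an assumption; the original programs may well have scaled or normalized the data differently.

```python
from itertools import combinations_with_replacement


def relative_abundances(counts):
    """Convert raw species counts into proportions that sum to 1."""
    total = sum(counts)
    return [c / total for c in counts]


def factor_values(abundances, loadings):
    """Project the abundances onto each factor (one loading vector per factor)."""
    return [sum(w * p for w, p in zip(vec, abundances)) for vec in loadings]


def transfer_function(factors, coeffs):
    """Evaluate linear + second-order + constant terms, in that order."""
    n = len(factors)
    terms = list(factors)
    terms += [factors[i] * factors[j]
              for i, j in combinations_with_replacement(range(n), 2)]
    terms.append(1.0)
    if len(terms) != len(coeffs):
        raise ValueError("coefficient count does not match number of terms")
    return sum(a * t for a, t in zip(coeffs, terms))


# Hypothetical sample from one level of a core.
counts = [120, 60, 20]                        # specimens counted per species
loadings = [[0.9, 0.1, 0.0],                  # factor 1 (made up)
            [0.0, 0.2, 0.8]]                  # factor 2 (made up)
coeffs = [10.0, -4.0, 2.0, 1.0, -3.0, 12.0]   # a1..a6 (made up)

p = relative_abundances(counts)
f = factor_values(p, loadings)
ts = transfer_function(f, coeffs)
print(f"estimated summer surface temperature: {ts:.2f}")
```

The important point is that the loadings and coefficients come from the modern surface samples and are held fixed; only the species counts change from one core level to the next.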

As you might expect, there are a lot of things that can go wrong. The environmental preferences of one or more species might change on geological timescales. Different species might bloom during different times of the year, and this may also change due to evolutionary pressures or some confounding effect like iron seeding in coastal seas due to variability in surface runoff. Nevertheless, the technique has been a mainstay of paleoclimatologists for about forty years.

Approaches to statistical arbitrage

The idea of statistical arbitrage is that there is a particular stock (or commodity or bond or what-have-you) which is mispriced relative to a model based on observations. This model would have the form of a transfer function as described above, but instead of using species abundance data, we use observed values of related financial data, including such things as the price of one or more indices, perhaps the unemployment rate, the inflation rate, the price of gold (or other commodities), and so on. As with the transfer functions described above, having accurate financial data (as opposed to manipulated "official" data sets) is critical.

There are at least two principal approaches to statistical arbitrage: 1) modeling individual stocks (or commodities or bonds or whatever), and 2) matched long-short trades between any number of stocks.

Instead of creating a transfer function between your observations and a particular stock price, you might model the ratio between the prices of two stocks. If the modeled ratio differs from the observed ratio, there may be an arbitrageable opportunity in going long the underpriced stock and short the overpriced one. Your assumption is that the dynamics of the ratio between the two prices have not changed, so you plan to take advantage of the reversion to the mean. You don't know whether mean reversion will occur by the overpriced stock falling or the underpriced one rising, but the paired trade should work provided the relationship between the stocks does return to the mean.
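Here is a minimal sketch of the ratio idea. It assumes the "modeled" ratio is simply the historical mean of the price ratio and that a deviation of two standard deviations is worth acting on; both are illustrative choices of mine rather than a recommendation, and the function name and prices are hypothetical.

```python
from statistics import mean, stdev


def ratio_signal(prices_a, prices_b, entry_z=2.0):
    """Compare the latest A/B price ratio with its own history.

    Returns (z, action), where z is how many standard deviations the latest
    ratio sits from the historical mean of the earlier ratios.
    """
    ratios = [a / b for a, b in zip(prices_a, prices_b)]
    history, latest = ratios[:-1], ratios[-1]
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return 0.0, "no trade"          # ratio never moved; nothing to model
    z = (latest - mu) / sigma
    if z > entry_z:
        return z, "short A, long B"     # A looks expensive relative to B
    if z < -entry_z:
        return z, "long A, short B"     # A looks cheap relative to B
    return z, "no trade"


# Made-up prices: A jumps on the last day while B stays flat.
prices_a = [100, 101, 99, 100, 102, 100, 110]
prices_b = [50, 50, 50, 50, 50, 50, 50]
z, action = ratio_signal(prices_a, prices_b)
print(action)
```

Note that the signal says nothing about which leg will move. It only bets that the ratio returns to its mean, which is exactly the assumption that fails if the relationship between the two stocks has genuinely changed.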

Speed comes into play because it is an advantage to be the first market participant to recognize the arbitrage opportunity. As the world is now filled with algorithms seeking these opportunities, they tend not to be available for long. One recent exception was in Canadian banks a few years ago, when two of the major banks were thought to be in trouble (and their dividend yields rose quite sharply as share prices fell)--there was a great trade in going long the high-yielding stocks and short the low-yielding stocks (on the assumption that in Canada there will not be a bank failure).

Many institutions calculate highly involved stat-arb positions involving matched long and short positions over large numbers of stocks. It can be difficult for a human to see how all the matches work. For human traders, stat arb probably works best in paired stocks, or a stock vs. an index.

For the record, I don't have any problem with algos pursuing stat arb. It seems to me to be an inherently fair process, and potentially it does improve liquidity if the under/overvaluation is driven by some sort of investor frenzy. I would have a problem if part of the strategy of the algo is to interfere with the access to information of market competitors by creating latency.


This really brings back memories.

